Improving Language Understanding by Generative Pre-Training

- paper: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf

The paper proposes a framework for achieving strong natural language understanding with a single task-agnostic model: generative pre-training on unlabeled text, followed by discriminative fine-tuning on each target task.
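As a compact reminder, the two stages correspond to the objectives below, written in the paper's notation as best recalled here: $\mathcal{U}$ is the unlabeled token corpus, $k$ the context window, $\Theta$ the model parameters, $\mathcal{C}$ the labeled dataset, and $\lambda$ the weight of the auxiliary language-modeling term. Check it against the paper before relying on it.

$$
\begin{aligned}
L_1(\mathcal{U}) &= \sum_i \log P(u_i \mid u_{i-k}, \ldots, u_{i-1}; \Theta) \\
L_2(\mathcal{C}) &= \sum_{(x, y)} \log P(y \mid x^1, \ldots, x^m) \\
L_3(\mathcal{C}) &= L_2(\mathcal{C}) + \lambda \, L_1(\mathcal{C})
\end{aligned}
$$

Here $L_1$ is the pre-training (language-modeling) objective, $L_2$ the supervised fine-tuning objective, and $L_3$ the combined objective used during fine-tuning.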

Textual entailment

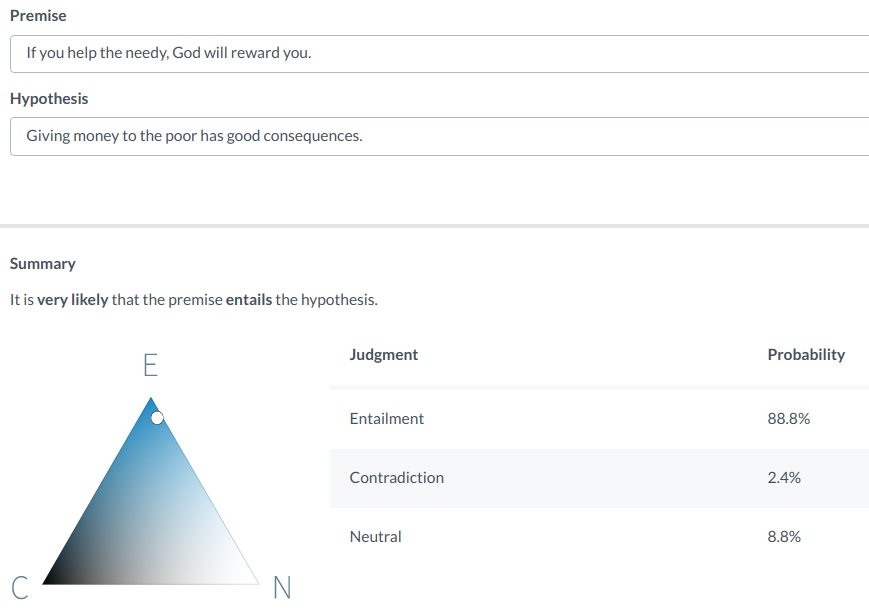

Premise: If you help the needy, God will reward you

Hypothesis: Giving money to the poor has good consequences.

Example from https://demo.allennlp.org/textual-entailment/MjMxNzU3MA==
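For entailment, the paper turns the premise and hypothesis into a single input sequence: the two are concatenated with a delimiter token in between and wrapped in start and extract tokens. The sketch below illustrates that layout at the string level only. The function name `format_entailment` is hypothetical, and the literal strings `<s>`, `$`, and `<e>` stand in for what would be special token IDs in a real tokenizer.

```python
# Placeholder strings for the special tokens; a real model would use
# dedicated token IDs whose embeddings are learned during fine-tuning.
START, DELIM, EXTRACT = "<s>", "$", "<e>"


def format_entailment(premise: str, hypothesis: str) -> str:
    """Lay out a premise/hypothesis pair as one sequence for the model."""
    return f"{START} {premise.strip()} {DELIM} {hypothesis.strip()} {EXTRACT}"


if __name__ == "__main__":
    sequence = format_entailment(
        "If you help the needy, God will reward you",
        "Giving money to the poor has good consequences.",
    )
    print(sequence)
```

The model's final hidden state at the extract token would then be passed to a linear classifier over the entailment labels.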